Media Server

Video file query, playback, storage and ingest solutions

Digital systems were progressively introduced in television from the late 1990s onward. They are complex systems that require sophisticated and expensive technology, and they change the way many teams work. At generalist channels, the process begins with the daily news area, whose flagship programs receive a large share of financial and human resources. Channels launched in the new millennium, of course, were digital from the start.

Daily news programs generate different materials, commonly classified into four main categories:

- A copy of the complete program as it was broadcast (parallel off-air copy).

- News items, which journalists have edited to produce the newscast.

- Originals (raw footage or rushes), recorded by the cameras, which editors use in fragments to edit the news.

- Agency materials (Reuters, APTN), exchanges with other broadcasters, institutional signals, etc., fragments of which editors use to edit the news.

The end product of television is the broadcast. For this reason, international organizations such as the Fédération Internationale des Archives de Télévision (FIAT) regard broadcast material as the material to be preserved par excellence. In all cases, material produced by the company itself takes priority for preservation over material acquired from third parties, for which the company does not hold exploitation rights. Collecting broadcast material, whether pre-recorded or live, has never presented difficulties for documentation services. In digital systems, moreover, this collection is automated: digital files include production and broadcast metadata and can be accompanied by other elements of interest, such as program rundowns (Martínez; Mas, 2010; Andérez, 2009).

However, not all archives preserve the material broadcast in news programs, and some television channels base their archive reuse policy on camera originals and received signals. In analog systems, retrieving originals was always difficult for the documentation service: tapes were delivered without information, often weeks or months after they were recorded, or were not delivered at all (Giménez, 2007). In digital systems this changes radically, since camera originals and all kinds of received signals (agency feeds, exchanges, etc.) are available to all interested groups from the moment they are ingested, and documentalists can select, clean up and save these recordings for cataloging and analysis.

But digitization has also made selection work more urgent, and it does not completely solve the lack of information about camera originals, especially when the ingest task falls to the Documentation Service (Aguilar Gutiérrez; López de Solís, 2010). In addition, an archive based on originals still requires selection tasks that are complex and costly in human resources (Giménez, 2007; Hidalgo, 2003; Selection group, 2004).

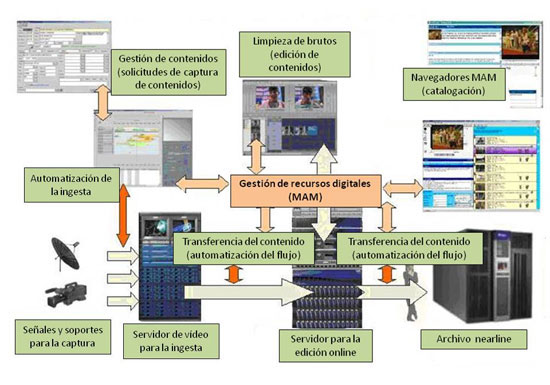

With the digitization of television production centers, signals from ENG camera memory cards and from satellite or fiber-optic links are encoded on the ingest video server and converted into computer files. These files are then transferred to an online server so that journalists (and documentalists too) can access the content, edit it and produce a news item.

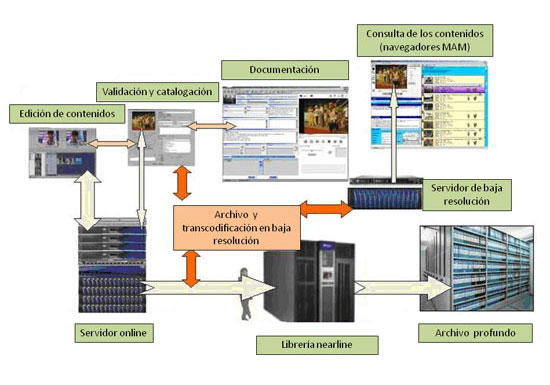

Journalists search the browser for the content they need to do their job. If that content is in online storage, a low-resolution server provides the images; if it is in the nearline library, it is transferred automatically from the library (or nearline archive) to this server. When the journalist finishes, publication begins with the generation of the new cut in the editor itself (fuse). The news item then goes automatically to a playout (broadcast) video server, which is linked to studio production and the program's rundown, so that it can be presented live from the set together with the other news items produced during editing.
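The lookup described above can be sketched in a few lines of Python. This is only an illustrative model, not the interface of any real media asset management product: the `Storage` class, its tier names and the `fetch_for_browsing` method are all hypothetical, and the two storage tiers are represented as simple in-memory sets.

```python
from dataclasses import dataclass, field


@dataclass
class Storage:
    """Hypothetical in-memory stand-in for the online and nearline tiers."""
    online: set = field(default_factory=set)
    nearline: set = field(default_factory=set)
    transfers: list = field(default_factory=list)  # log of automatic restores

    def fetch_for_browsing(self, clip_id: str) -> str:
        """Report how the clip was served, restoring it from nearline if needed."""
        if clip_id in self.online:
            return "online"
        if clip_id in self.nearline:
            # Automatic transfer from the nearline library to the online server.
            self.transfers.append(clip_id)
            self.online.add(clip_id)
            return "restored"
        raise KeyError(f"clip {clip_id!r} is in neither online nor nearline storage")
```

In this sketch, a second request for a restored clip is served directly from the online tier, which mirrors the idea that the journalist never needs to know where the content physically lives.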

Publication simultaneously triggers automatic processes that archive the new item in the nearline library and transcode it to generate its low-resolution version. From then on, the entire organization can view the content. At the end of the process, once the content has been archived, the copies on the hard-disk servers will have expired; a high-resolution copy remains in the library and a low-resolution version remains on the low-resolution server.
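As a rough illustration of this life cycle, the sketch below models each server as a set of item identifiers. The function names, the tier names (`online`, `playout`, `nearline`, `lowres`) and the rule that disk copies may only expire after archiving are assumptions made for the example, not a description of a specific system.

```python
def publish(item_id, locations):
    """Publish an item; `locations` maps a tier name to a set of item ids."""
    # Publication sends the finished item to the playout server.
    locations["playout"].add(item_id)
    # Triggered automatically: archive the high-resolution copy in nearline
    # and register the transcoded low-resolution version.
    locations["nearline"].add(item_id)
    locations["lowres"].add(item_id)


def expire_disk_copies(item_id, locations):
    """Drop the hard-disk copies, but only once the item is archived."""
    if item_id not in locations["nearline"]:
        raise ValueError("refusing to expire: item is not archived yet")
    for tier in ("online", "playout"):
        locations[tier].discard(item_id)
```

After both steps, the item exists only in the nearline library (high resolution) and on the low-resolution server, which is the end state the paragraph describes.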

The audiovisual content management system (Media Asset Management) provides automatic processes for managing digital storage, ensuring that the exact location of the content is known throughout the production process without anyone having to worry about it. As Koldo Lizarralde (2009), deputy director of Engineering at ETB, points out, automatic server cleanup works properly only because the prior cataloging is correct, since content must be kept for a different length of time on each server depending on its format. If content is correctly cataloged by format, clearly distinguishing a news item from an edit cut, a television program, etc., all these automatic processes are triggered efficiently. He adds that one of the great difficulties encountered during the years-long process of digitizing a station like ETB is that the information must be as reliable as possible at the source, with no errors in the classification of content, so that the automatic processes complete reliably.
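The dependence of automatic cleanup on correct cataloging can be illustrated with a small sketch. The retention periods, server name and content-type labels below are invented for the example (the article gives no figures), and `items_to_purge` is a hypothetical helper rather than part of any real system.

```python
from datetime import datetime, timedelta

# Hypothetical retention periods per (server, content type).
RETENTION = {
    ("online", "news_item"): timedelta(days=7),
    ("online", "edit_cut"): timedelta(days=2),
    ("online", "program"): timedelta(days=30),
}


def items_to_purge(catalog, server, now):
    """Return (purge, uncatalogued) lists of item ids for `server`.

    Items whose content type is missing or unknown are never purged
    automatically; they are reported so the catalog entry can be fixed.
    """
    purge, uncatalogued = [], []
    for item in catalog:
        limit = RETENTION.get((server, item.get("type")))
        if limit is None:
            uncatalogued.append(item["id"])
        elif now - item["ingested"] > limit:
            purge.append(item["id"])
    return purge, uncatalogued
```

The design choice here is deliberately conservative: an item with a wrong or missing type falls outside the retention table, so the automatic process cannot act on it, which is one way to picture why classification errors at the source undermine the whole chain.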

CD MX (55) 4125 0121 - Email:
